Objectives

In this project we investigate deep learning architectures (neural networks comprising a large number of layers) for human activity recognition, particularly from wearable sensor data.

This is motivated by:

- The need to improve human activity recognition performance across an ever larger number of activity classes, in order to address problems of societal relevance

- The desire to analyse what may be fundamental "units of behaviour" that make up human activities as a way to design more scalable activity recognition systems

- The need to reduce the engineering effort spent on designing features for activity recognition

Outcomes

Current outcomes include:

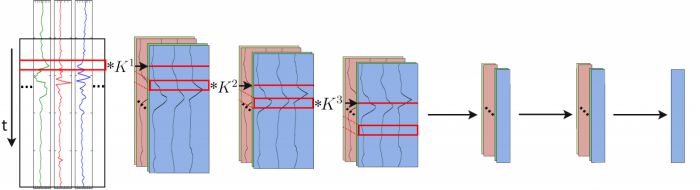

- A generic deep network that combines convolutional layers with recurrent layers based on LSTM cells, suitable for multimodal sensor data and capable of seamlessly fusing data from multiple sensor modalities

- A 9 percentage point increase in F1 score over the best published results, reported on a recognised human activity recognition dataset
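
To make the first outcome more concrete, the sketch below traces how one window of multimodal sensor data could pass through a stack of convolutional layers followed by LSTM layers. It is only an illustration under stated assumptions: the function names (`conv_time_length`, `trace_shapes`) are hypothetical, and every default value (window length, number of sensor channels, filter count, kernel size, LSTM units, number of classes) is a placeholder rather than the configuration of the network described above. It also assumes that convolutions run along the time axis only and that sensor channels are merged just before the recurrent layers, which is one possible way to arrange the fusion.

```python
def conv_time_length(time_steps: int, kernel: int) -> int:
    """Length of the time axis after one unpadded, stride-1 convolution."""
    if kernel > time_steps:
        raise ValueError("kernel is longer than the remaining time axis")
    return time_steps - kernel + 1


def trace_shapes(time_steps, sensor_channels, n_conv=4, n_filters=64,
                 kernel=5, lstm_units=128, n_classes=18):
    """Return the tensor shape after each stage for a single input window.

    All default hyperparameters are illustrative assumptions.
    """
    # One window: (time, sensor channel, feature map); raw data has 1 feature map.
    shapes = {"input": (time_steps, sensor_channels, 1)}

    # Convolutions slide along time only, so each sensor channel is
    # processed separately and the channel axis is left untouched.
    t = time_steps
    for i in range(1, n_conv + 1):
        t = conv_time_length(t, kernel)
        shapes[f"conv{i}"] = (t, sensor_channels, n_filters)

    # Assumed fusion step: at every remaining time step, the feature maps of
    # all sensor channels are flattened into a single vector for the LSTM.
    shapes["lstm_input"] = (t, sensor_channels * n_filters)

    # The recurrent layers keep the time axis and emit lstm_units per step.
    shapes["lstm_output"] = (t, lstm_units)

    # A final classifier maps the last recurrent state to one score per class.
    shapes["class_scores"] = (n_classes,)
    return shapes


if __name__ == "__main__":
    for stage, shape in trace_shapes(time_steps=24, sensor_channels=113).items():
        print(f"{stage:>12}: {shape}")
```

Because the convolutions in this sketch never mix sensor channels, the same layer definitions apply to any number or type of sensors; only the width of the vector handed to the recurrent layers changes.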

Publications

- Ordonez Morales, Francisco Javier and Roggen, Daniel (2016) Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors, 16 (1). pp. 1-25. ISSN 1424-8220

- Ordonez Morales, Francisco Javier and Roggen, Daniel (2016) Deep convolutional feature transfer across mobile activity recognition domains, sensor modalities and locations. In: 20th International Symposium on Wearable Computers (ISWC) 2016, 12-16 September 2016, Heidelberg, Germany.

People

Francisco Javier Ordonez Morales; project supervised by Daniel Roggen.