Sussex-Huawei Locomotion (SHL) dataset - a versatile annotated dataset for multimodal locomotion analytics with smartphones and body-worn camera

For additional information: refer to the dedicated SHL Dataset website.

The University of Sussex-Huawei Locomotion (SHL) dataset is highly versatile and richly annotated. It contains multimodal locomotion data (smartphone sensors and a body-worn camera), recorded over a period of 7 months by 3 participants engaging in 8 different modes of transportation in real-life settings in the United Kingdom: Still, Walk, Run, Bike, Car, Bus, Train, and Subway (Tube).

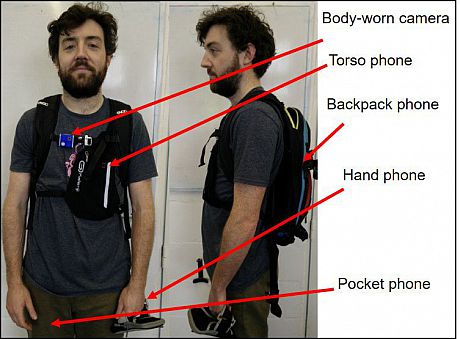

The data were collected with 4 smartphones and a body-worn camera (see picture below).

The SHL dataset is suitable for a wide range of studies in fields such as transportation recognition, mobility pattern mining, activity recognition, localization, tracking, and sensor fusion.

The SHL dataset contains 750 hours of labelled locomotion data: Car (88 h), Bus (107 h), Train (115 h), Subway (89 h), Walk (127 h), Run (21 h), Bike (79 h), and Still (127 h).
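The per-mode figures sum to 753 h, consistent with the rounded total of 750 h. The short Python sketch below restates those numbers in a dictionary (the function names are illustrative only) to check the total and to expose the class imbalance: Run, for example, contributes under 3% of the labelled data, which is worth keeping in mind when training a classifier.

```python
# Labelled hours per transportation mode, as listed in the text above.
HOURS_PER_MODE = {
    "Car": 88, "Bus": 107, "Train": 115, "Subway": 89,
    "Walk": 127, "Run": 21, "Bike": 79, "Still": 127,
}


def total_hours(hours: dict[str, int]) -> int:
    """Sum the labelled hours over all modes."""
    return sum(hours.values())


def mode_shares(hours: dict[str, int]) -> dict[str, float]:
    """Fraction of the labelled data contributed by each mode."""
    total = total_hours(hours)
    return {mode: h / total for mode, h in hours.items()}


if __name__ == "__main__":
    print(total_hours(HOURS_PER_MODE))  # 753
    # Print modes from largest to smallest share.
    for mode, share in sorted(mode_shares(HOURS_PER_MODE).items(), key=lambda kv: -kv[1]):
        print(f"{mode:7s} {share:6.1%}")
```

Shares like these can be turned into inverse-frequency class weights if a balanced loss is wanted.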

Four HUAWEI Mate 9 smartphones were used for the data collection. They were placed at body locations where people typically carry phones: hand, torso, backpack, and front trouser pocket. The phones run a custom data logging application (a screenshot is given in the picture below), which logs 16 sensor modalities: accelerometer, gyroscope, magnetometer, linear acceleration, orientation, gravity, temperature, light sensor, ambient pressure, ambient humidity, location, satellites, cellular networks, WiFi networks, battery level, and audio. Each sensor was recorded at the highest sample rate offered by the Android sensor services. The Android application synchronizes the phones over a Bluetooth connection, shows status information, and allows the user to annotate the data on the master phone.
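The text does not describe how the Bluetooth synchronization works internally. As one illustration of how a master phone *could* estimate a follower phone's clock offset, the Python sketch below uses the classic two-way timestamp exchange from NTP; this is an assumed, generic technique, not the SHL application's documented method, and the names `Exchange`, `best_offset` and `to_master_time` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Exchange:
    """One request/response round trip between the master and a follower phone.

    All times are in milliseconds:
      t0: master sends request     (master clock)
      t1: follower receives request (follower clock)
      t2: follower sends reply      (follower clock)
      t3: master receives reply     (master clock)
    """
    t0: float
    t1: float
    t2: float
    t3: float

    def offset(self) -> float:
        """Estimated (follower clock - master clock), NTP offset formula."""
        return ((self.t1 - self.t0) + (self.t2 - self.t3)) / 2

    def round_trip(self) -> float:
        """Round-trip delay, excluding the follower's processing time."""
        return (self.t3 - self.t0) - (self.t2 - self.t1)


def best_offset(exchanges: list[Exchange]) -> float:
    """Take the offset from the exchange with the smallest round-trip delay,
    since a short round trip limits the error caused by asymmetric delays."""
    return min(exchanges, key=Exchange.round_trip).offset()


def to_master_time(follower_ts: float, offset: float) -> float:
    """Map a follower timestamp onto the master's time base."""
    return follower_ts - offset
```

For example, if the follower's clock runs 100 ms ahead and each link direction takes 10 ms, the exchange `Exchange(0, 110, 115, 25)` yields an offset of 100 ms and a round trip of 20 ms, and `to_master_time(115, 100)` maps the follower's reply time back to 15 ms on the master clock.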

On the master phone, the user can choose among 8 primary categories: Still, Walk, Run, Bike, Car, Bus, Train, and Subway (Tube). Additionally, for some of the categories the user can choose the location (inside or outside) and the posture (stand or sit), which gives 18 combinations in total. These annotations are sent to the other phones for consistency.
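One way to represent these annotations in analysis code is a labelled time interval with an optional location and posture, plus a lookup that assigns the covering label to each sensor timestamp. The Python sketch below assumes millisecond timestamps on a shared time base and non-overlapping intervals; the class names are hypothetical, not part of any SHL file format. The text does not say which categories allow which attributes, so the sketch does not validate combinations against the 18 allowed ones.

```python
from bisect import bisect_right
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    """The 8 primary categories selectable on the master phone."""
    STILL = "Still"
    WALK = "Walk"
    RUN = "Run"
    BIKE = "Bike"
    CAR = "Car"
    BUS = "Bus"
    TRAIN = "Train"
    SUBWAY = "Subway"


class Location(Enum):
    INSIDE = "inside"
    OUTSIDE = "outside"


class Posture(Enum):
    STAND = "stand"
    SIT = "sit"


@dataclass(frozen=True)
class Annotation:
    """A labelled interval [start, end) in milliseconds."""
    start: int
    end: int
    mode: Mode
    location: Optional[Location] = None  # only set for some categories
    posture: Optional[Posture] = None    # only set for some categories


class LabelTimeline:
    """Finds the annotation covering a timestamp; intervals must not overlap."""

    def __init__(self, annotations: list[Annotation]) -> None:
        self._items = sorted(annotations, key=lambda a: a.start)
        self._starts = [a.start for a in self._items]

    def label_at(self, ts: int) -> Optional[Annotation]:
        # Last interval starting at or before ts, if ts is still inside it.
        i = bisect_right(self._starts, ts) - 1
        if i >= 0 and ts < self._items[i].end:
            return self._items[i]
        return None  # ts falls outside every annotated interval
```

Binary search keeps each lookup logarithmic in the number of annotations, which matters when labelling high-rate sensor streams sample by sample.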

Additionally, the participants wore a front-facing body camera, which was used to verify label quality during post-processing; as part of the dataset, it will also allow vision-based processing such as object recognition. The camera was set to take a picture every 30 seconds, which is frequent enough to reconstruct the course of each recording day while being less invasive to the user’s privacy than a full video recording.
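A 30-second capture interval gives 120 images per hour. To relate a sensor sample to the camera, a natural step is to find the image taken closest in time. The Python sketch below assumes the image timestamps are available in seconds, sorted, and on the same time base as the sensor data (an assumption, not something the text specifies); `nearest_image` and its `max_gap` parameter are hypothetical names.

```python
from bisect import bisect_left
from typing import Optional

CAPTURE_INTERVAL_S = 30  # camera setting stated in the text


def images_per_hour(interval_s: float = CAPTURE_INTERVAL_S) -> float:
    """Number of pictures taken per hour at a fixed capture interval."""
    return 3600 / interval_s


def nearest_image(image_times: list[float], ts: float,
                  max_gap: float = CAPTURE_INTERVAL_S / 2) -> Optional[int]:
    """Index of the image closest in time to ts (seconds), or None if the
    closest image is more than max_gap seconds away (e.g. camera paused)."""
    if not image_times:
        return None
    i = bisect_left(image_times, ts)
    # The nearest image is either just before or just after the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(image_times)]
    best = min(candidates, key=lambda j: abs(image_times[j] - ts))
    return best if abs(image_times[best] - ts) <= max_gap else None
```

Matching against the recorded image timestamps, rather than assuming perfectly periodic capture, tolerates gaps and small drifts in the camera's timing.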

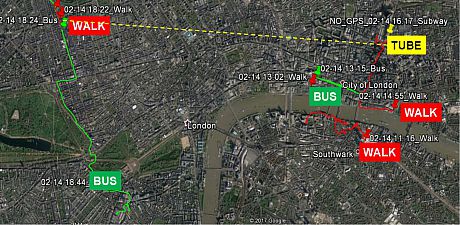

An example of the GPS data collected for a visit in London is shown in Figure 1.

Figure 1: GPS data collected in London.

The large number of sensors at different body locations, the diverse set of activities in different areas, and the precise annotation make this dataset a valuable foundation for various research fields, e.g., automatic recognition of transportation modes and activities, detection of social interaction, detection of road and traffic conditions, localization, and sensor fusion. Further applications are expected based on the collected sound recordings and the camera data, e.g., object and activity recognition. The GPS and WiFi data have valuable applications for indoor localization and can serve as a baseline for sensor-based localization.
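When GPS fixes serve as the baseline for sensor-based localization, a common evaluation is the great-circle distance between each estimated position and the time-aligned GPS fix, summarized as an RMSE. The Python sketch below uses the haversine formula on a spherical Earth (mean radius 6,371 km); treating GPS as ground truth and assuming the two sequences are already time-aligned are simplifications, and the function names are illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def localization_errors(estimates: list[tuple[float, float]],
                        gps_fixes: list[tuple[float, float]]) -> list[float]:
    """Per-sample distance (m) between estimated positions and GPS fixes.
    Both lists must already be aligned in time, one entry per sample."""
    if len(estimates) != len(gps_fixes):
        raise ValueError("estimates and gps_fixes must have the same length")
    return [haversine_m(e[0], e[1], g[0], g[1])
            for e, g in zip(estimates, gps_fixes)]


def rmse(errors: list[float]) -> float:
    """Root-mean-square of a non-empty list of errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```

Because GPS itself is noisy, especially near buildings, errors measured this way bound the combined error of the estimate and the baseline rather than the estimate alone.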

Once the dataset is fully collected and curated, it will be available for download. We expect to publish it by the end of 2017.

For early access to the dataset, please contact Dr. Daniel Roggen.