Technology you can touch and smell, but not see

Our five senses help us make sense of the world. Yet current technology relies mostly on our visual and auditory senses.

Smell navigation?

Some researchers from the University of Sussex’s Creative Technology Group are exploring how future technology can become more interactive and immersive by using all our senses.

Distracting a driver’s eyes with warning signals can have fatal consequences. Researchers are exploring whether our sense of smell could communicate information to drivers whose eyes and ears are already overloaded. “Maybe we can reduce some of their other information or find a niche where smell can be used to convey information to them,” said Marianna Obrist, a Reader in Interaction Design at the University of Sussex.

While drivers of autonomous cars should remain alert and keep their eyes on the road, clearly not all drivers follow the rules. The first death involving a driverless car occurred in 2016; the driver may have been watching a movie and not looking at the road ahead when the fatal accident occurred. Obrist’s lab has carried out research that shows people are able to establish links between a particular driving-related message, such as ‘slow down’, and a particular scent. The olfactory technology could be used in autonomous cars to provide warning signals to drivers.

“What we have seen with the Tesla incident is that, despite several warnings, [the driver] didn’t get his attention back on the street,” said Obrist. “We know that our sense of smell is very powerful in getting our attention even when we are not looking.”

Metamaterial bricks are shaping sound in new ways

Creative Technology Group researchers are also giving Dr Who and his sonic screwdriver, a versatile tool the Doctor uses to open doors and blast away enemies, a run for his money by shaping sound in new and innovative ways.

They have developed a sound-shaping material that controls acoustic fields using three-dimensional units that bend, shape and focus the sound waves passing through them. These small, pre-manufactured units, which they call ‘metamaterial bricks’, can be stacked into layers to focus and steer acoustic waves. Each brick delays the sound that goes through it. “It’s like a maze,” said Gianluca Memoli, a Lecturer in Novel Interfaces and Interactions at the University of Sussex, who led the study. “It takes time to go through a maze, and that’s what happens when sound goes through a brick. There are bricks that have longer mazes and bricks with smaller mazes. Of course, you spend more time going through a longer maze. If you put together bricks with a longer maze and bricks with a shorter maze then this creates an [acoustic] shape as they arrive at different moments.”
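The delay idea can be illustrated with a little arithmetic. The sketch below is not the researchers' design method; it only assumes a flat row of bricks, sound travelling in air at about 343 m/s, and a hypothetical function name (`focusing_delays`) and brick spacing chosen for illustration. It works out how long each brick would have to hold back the sound so that every path reaches the same focal point at the same moment. The central brick has the shortest path, so it needs the longest "maze".

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly room temperature (assumed)

def focusing_delays(brick_positions_m, focal_distance_m, speed=SPEED_OF_SOUND):
    """Delay (in seconds) each brick must add so sound from a flat row of
    bricks arrives at an on-axis focal point simultaneously.

    brick_positions_m: sideways offset of each brick from the axis, in metres.
    focal_distance_m:  distance from the brick layer to the focus, in metres.
    """
    # Straight-line distance from each brick to the focal point.
    path_lengths = [math.hypot(x, focal_distance_m) for x in brick_positions_m]
    longest = max(path_lengths)
    # Bricks with shorter paths wait longer; the farthest brick adds no delay.
    return [(longest - d) / speed for d in path_lengths]

# Illustrative values only: five bricks spaced 1 cm apart, focus 5 cm away.
positions = [-0.02, -0.01, 0.0, 0.01, 0.02]
delays = focusing_delays(positions, focal_distance_m=0.05)

for x, t in zip(positions, delays):
    print(f"brick at {x * 100:+.0f} cm -> delay {t * 1e6:.1f} microseconds")
```

Choosing a different set of delays would produce a different acoustic shape, which is the sense in which stacking longer and shorter mazes lets the layer be reconfigured.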

Controlling acoustic fields is generally done with fixed lenses or phased arrays, technologies that are often bulky and expensive. The bricks could prove a much cheaper option as they can be 3D printed or otherwise assembled using any material at all, including wood, plastic, glass or even paperboard. “The bricks can be reassembled and controlled to form any acoustic field that you can imagine. It’s a do-it-yourself kit that you could use at home to shape your own sound,” said Memoli.

Large versions of the metamaterial bricks could direct or focus sound to a particular location and form areas of silence or audio hotspots, as done with directional loudspeakers. In a car, this would mean the driver could be listening to GPS instructions while the passenger listens to music. In a disco, a metamaterial sleeve over a loudspeaker could create an area of silence where you could go chat with your friends.

Much smaller versions of the metamaterial bricks could be made for medical and industrial applications using ultrasound: high-frequency sound waves pitched above the range of human hearing, although some animals can hear them. In these applications, acoustic waves move through tissues and solids just as audible sound moves through air. A metamaterial layer tailor-made to treat a patient could be fitted onto an ultrasound device and used to destroy tumours deep within the body by bombarding the unwanted cells with ultrasound waves. The metamaterial bricks have been developed jointly by the University of Sussex and the University of Bristol. There are plans to establish Metasonics Ltd in the next couple of months.

Ultrahaptics: human interface of the future?

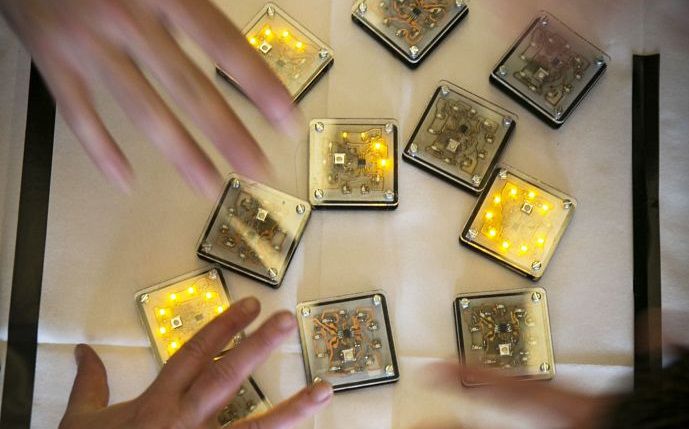

Ultrasound technology can also be used to give users the physical sensation of touching three-dimensional objects floating in mid-air. Sriram Subramanian, Professor of Informatics at the University of Sussex, co-developed the unique haptics technology and co-founded a spin-out company, Ultrahaptics.

In this case, high frequency sounds or ultrasonic waves are transmitted through the air from a specially designed pad. If they are precisely synchronised, the pressure from all the different waves added together can create a localised area of very high pressure, which is strong enough to be felt, say, on your hand or fingertips. By changing the frequency of modulation, researchers can change the virtual sensation felt by users, creating the feeling of tiny bubbles popping, rain drops, a stream of liquid or the outlines of floating three-dimensional shapes. The Ultrahaptics pad combines ultrasound emitters with motion sensor technologies, which track the hand so that sensations are sent where needed. The software can be programmed to find your hand from a distance as far as six feet away and direct sound to it, projecting sensations even onto a moving hand.
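To see why synchronisation matters, here is a minimal sketch of waves adding up at one point. It is not Ultrahaptics' software: the 8 x 8 grid, the 1 cm spacing, the 40 kHz carrier and all function names are assumptions made for illustration, and the model ignores how sound weakens with distance. Each emitter's starting phase is set to cancel the phase picked up on the way to the focus, so all waves arrive in step there and only partly in step elsewhere.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0   # m/s in air, approximate
FREQUENCY_HZ = 40_000.0  # assumed ultrasonic carrier frequency
WAVENUMBER = 2 * math.pi * FREQUENCY_HZ / SPEED_OF_SOUND  # radians per metre

def make_grid(n=8, pitch_m=0.01):
    """Hypothetical flat n x n pad of emitters centred on the origin (z = 0)."""
    offset = (n - 1) * pitch_m / 2
    return [(i * pitch_m - offset, j * pitch_m - offset, 0.0)
            for i in range(n) for j in range(n)]

def focusing_phases(emitters, focus):
    """Starting phase per emitter that cancels the travel phase to the focus."""
    return [-WAVENUMBER * math.dist(e, focus) for e in emitters]

def relative_pressure(emitters, phases, point):
    """Magnitude of the summed unit-amplitude waves at a point.

    Simplification: amplitude loss with distance is ignored, so the result
    is a count of how many emitters' worth of pressure add up in step.
    """
    total = sum(cmath.exp(1j * (WAVENUMBER * math.dist(e, point) + p))
                for e, p in zip(emitters, phases))
    return abs(total)

emitters = make_grid()
focus = (0.0, 0.0, 0.2)  # 20 cm above the centre of the pad
phases = focusing_phases(emitters, focus)

at_focus = relative_pressure(emitters, phases, focus)
off_focus = relative_pressure(emitters, phases, (0.05, 0.0, 0.2))

print(f"relative pressure at the focus: {at_focus:.1f}")
print(f"relative pressure 5 cm to the side: {off_focus:.1f}")
```

Moving the focus only requires recomputing the phases, which is how a tracked hand could in principle be followed without moving the pad.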

In a car, when the driver’s eyes are busy watching the road, the driver’s hand could be tracked and a dial to change the radio station would come to it. Land Rover has already seen the technology’s potential and is working with Ultrahaptics to investigate a mid-air touch system. In places like ATMs or hospitals, users could touch buttons created in mid-air in order to reduce the spread of infections. Ultrahaptics has launched a TOUCH dev kit, an important step to getting products to market as companies can use the kit to evaluate the technology. The company has also received funding from Woodford Investment Management and IP Group Plc.

Understanding how humans process sensory information

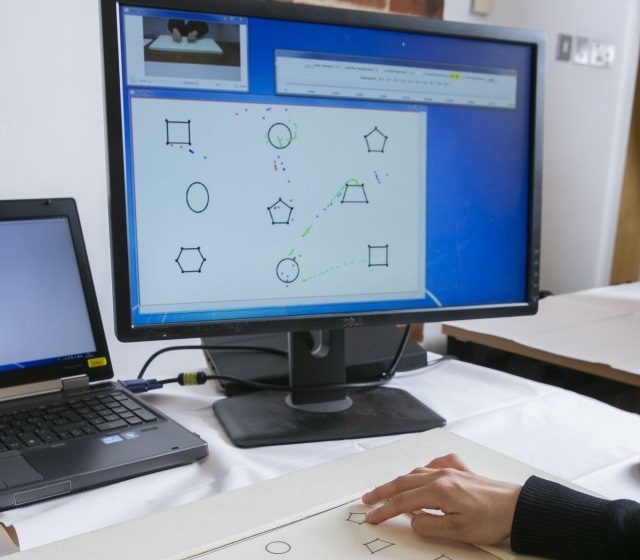

Cognitive scientists within the Creative Technology Group are researching how people perceive and process different sensory inputs. These insights will help inform how multi-sensory interactions, interfaces, and devices will be designed. Take, for example, a graph in a physics book that plots out a function with a curve. A visually impaired person may not be able to see the diagram, but can use their fingertips to get the encoded information from a tactile version of it. However, an effective tactile graphic does not necessarily look the same as a visual one.

"While most of the technologies we use today are focused on sight, other sensory inputs may require information to be represented differently, especially when we want to enable learning and problem-solving using multiple senses," says Ronald Grau, a contributor to the Cognitive Science of Tactile Graphics project led by Peter Cheng, Professor of Cognitive Science at the University of Sussex. The researchers on the project are looking at the strategies that blind or visually impaired people employ to solve different tasks using tactile graphics, in order to learn more about how the human cognitive system processes that information. “We are only just beginning to understand how cognition works with multiple senses”, said Grau. "It is like discovering a whole new world of complex relations that was previously hidden from our view.” It is hoped that insights from cognitive science will create the foundations for completely novel ways of interacting with technology.

Enhancing virtual reality experiences

All of these technologies could also make virtual reality experiences more immersive. A scent diffuser attached to a virtual reality mask could help create a virtual rainforest that smells like the real thing. Adding tactile feedback would give video game players the ability to brandish a virtual sword or open a virtual door, while metamaterial-based technologies could help one player in one virtual space experience different sounds from another player.

Clearly, Dr Who’s sonic screwdriver needs to start catching up with reality.

Author: Suzanne Fisher-Murray

Date published: 3 October 2018
