Research

Find out about our research interests and expertise.

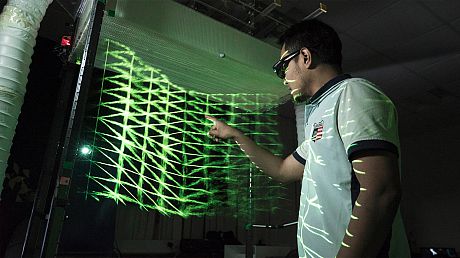

Our Creative Technology research is concerned with the interfaces between humans and digital technology, and with how these interfaces are changing. We investigate interaction in the broadest sense, considering it in relation to digital technologies, connected physical artifacts, people’s experiences, and their practices with mobile, immersive, ubiquitous and pervasive computing.

The Head of the Research Group is Dr Gianluca Memoli (G.Memoli@sussex.ac.uk).

If you have a specific inquiry, you can also find the most relevant contact on our People page.

If you want to know about our current openings, refer to our Join us page.