Graham Hole’s research interests:

The structural basis of face recognition:

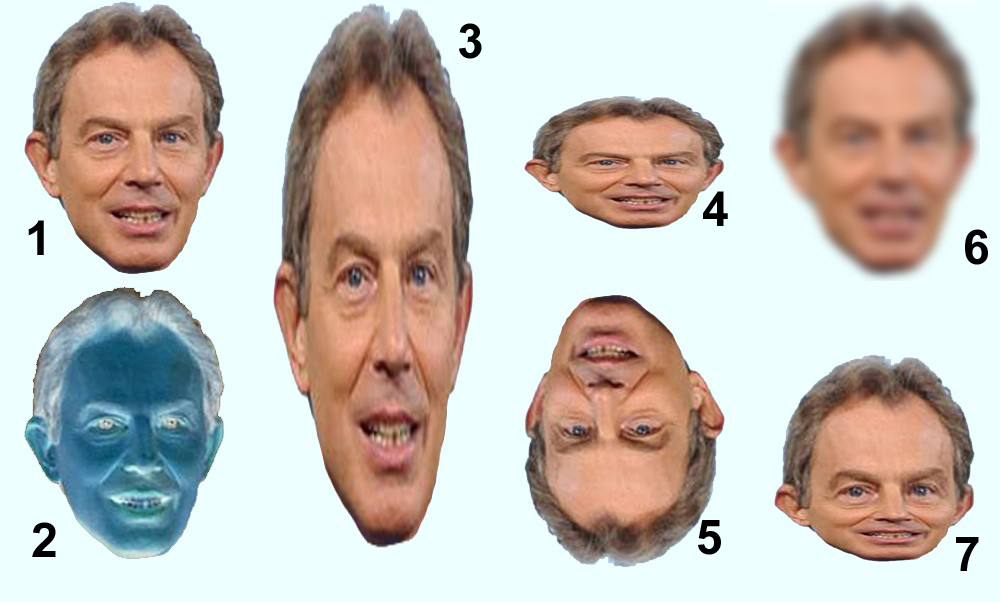

How do we recognise faces? What information from a face do we use in order to do this? Although faces can often be recognised on the basis of individual features, face recognition seems to depend heavily on the "configural" properties of a face (the interrelationship between the facial features). Blurring a face (6) largely removes its featural detail, and yet even very blurred faces remain quite recognisable. However, people can recognise faces as easily when they have been stretched or squashed as when they are normal - as long as these transformations are applied uniformly to the entire face, as in examples (3) and (4) above. In (7), stretching has been applied only to the top half of Tony's face, and this makes him hard to recognise. Experiments using images like these suggest that "configural processing" involves more than a straightforward comparison of distances between features (e.g. the eye separation compared to the distance between the top of the nose and the chin), since all of these relationships are disrupted by stretching or squashing. Paradoxically, there are manipulations which make face recognition quite difficult even though they leave a face's spatial properties intact (e.g. photographic contrast reversal (2), and inversion (5)).
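To make the point about distance comparisons concrete, here is a minimal sketch of what a uniform vertical stretch does to one such comparison. The landmark coordinates and the function names (`stretch_vertically`, `eye_to_chin_ratio`) are made up purely for illustration; they are not measurements or code from any experiment.

```python
import math

# Hypothetical landmark positions (x, y) in arbitrary units; illustration only.
landmarks = {
    "left_eye": (-3.0, 0.0),
    "right_eye": (3.0, 0.0),
    "nose_top": (0.0, 0.0),
    "chin": (0.0, 8.0),
}

def stretch_vertically(points, factor):
    """Apply a uniform vertical stretch to every landmark."""
    return {name: (x, y * factor) for name, (x, y) in points.items()}

def eye_to_chin_ratio(points):
    """Eye separation divided by the nose-top-to-chin distance."""
    eye_separation = math.dist(points["left_eye"], points["right_eye"])
    nose_to_chin = math.dist(points["nose_top"], points["chin"])
    return eye_separation / nose_to_chin

normal_ratio = eye_to_chin_ratio(landmarks)
stretched_ratio = eye_to_chin_ratio(stretch_vertically(landmarks, 2.0))

print(normal_ratio)     # 0.75
print(stretched_ratio)  # 0.375
```

Doubling the height of the face halves this ratio, so a recogniser that relied only on comparing such distances would be expected to struggle with stretched faces.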

Numerous studies have suggested that familiar and unfamiliar face recognition differ in various ways: research on eyewitness testimony shows that we are poor at recognising a face that has been seen only briefly before. Unfamiliar face recognition seems to be heavily tied to the particular view of the face that was seen. People are poor at coping with changes in lighting, viewpoint and expression. In contrast, familiar face recognition is much more tolerant of such things. In my current research, I'm interested in seeing to what extent people can cope with manipulations such as stretching when they are applied to unfamiliar faces. The short answer is: "just about as well as they do with familiar faces". My current hunch is that faces are "normalised" (preprocessed to a standard format, in terms of things like orientation and proportions) before recognition is attempted, and at the moment I'm investigating this issue using distorted faces.

With Victoria Bourne, I've co-authored a textbook on face processing: "Face Processing: Psychological, Neuropsychological and Applied Perspectives", published by Oxford University Press. (It also covers developmental aspects of face processing, but including that would have made the title even more unwieldy!)

The psychology of driving:

For a long time, I've been interested in the perceptual and attentional aspects of driving. Driving is an extremely demanding activity from a perceptual point of view: drivers have to make rapid decisions on the basis of visual input, such as when emerging from a junction without hitting the oncoming traffic. Occasionally failures of detection occur - so-called "looked but failed to see" errors, such as when drivers pull out from a junction into the path of a cyclist or motorcyclist. I'm interested in why these occur, and what can be done to avoid them. You might think that the answer's obvious - that they occur because two-wheelers are small and therefore hard to see. However, this is not the explanation. These kinds of accidents usually occur when the motorcyclist or cyclist is close to the emerging vehicle - too close for anyone to take action to redeem the situation. Close-up, physically-small things produce big images on the retina, so at the time that a driver pulls out in front of a two-wheeler, the latter is casting a pretty big image on the driver's retina, and should therefore be readily detectable. In any case, "looked but failed to see" accidents can happen with objectively-conspicuous vehicles too - a few years ago, we investigated accidents in which people drove straight into parked police cars and then claimed not to have seen them!
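The point about retinal image size can be sketched with the standard visual-angle formula, angle = 2 × arctan(size / (2 × distance)). The figures below (a motorcycle and rider about 0.8 m wide, viewed head-on at various distances) are assumptions chosen only for illustration, not data from any accident study.

```python
import math

def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Angle subtended at the eye by an object of the given size, in degrees."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))

# Assumed width of a motorcycle and rider seen head-on (hypothetical value).
WIDTH_M = 0.8

for distance_m in (100, 50, 10):
    angle = visual_angle_deg(WIDTH_M, distance_m)
    print(f"{distance_m:>3} m away: about {angle:.2f} degrees wide")
```

Under these assumptions, the two-wheeler's image is roughly ten times wider at 10 m than at 100 m, which is why smallness alone is an unconvincing explanation for collisions that happen at close range.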

My more recent research is on the effects of using a mobile phone while driving. How does this affect a driver's ability to detect hazards, etc.? Research around the world during the past 15 years or so has consistently shown that hands-free phones are just as bad as hand-held phones as far as driving is concerned. Phones affect driving not so much by making it hard to control the car (although obviously holding a phone doesn't exactly help with steering and using the indicators!) but by taking the driver's attention away from the outside world. Drivers who are using a phone have a restricted breadth of attention - they show a kind of "tunnel vision" compared to undistracted drivers. They use their mirrors less and they are less likely to notice road signs.

This is a good opportunity to plug my book, "The Psychology of Driving", which covers perceptual and attentional aspects of driving, and reviews the scientific literature on mobile phones and other in-car technology. It also discusses research on the effects on driving of drugs, age, fatigue and inexperience (the four big killers on our roads today).

ISBN 0-8058-5978-0. Published by Lawrence Erlbaum, October 2006.

For a list of my publications, click here.